Enhancing transparency and efficiency in clinical AI applications while building public trust in the use of artificial intelligence in healthcare

Mission Statement

The Center for Responsible AI in Healthcare was established to enhance transparency and efficiency in clinical applications and to build public trust in, and acceptance of, artificial intelligence (AI) technologies in the healthcare field.

With the rapid advancement of AI, its applications in healthcare have expanded beyond disease diagnosis, risk prediction, and medical image interpretation to include clinical documentation support, which can significantly reduce the time healthcare professionals spend writing medical records.

However, the adoption of AI is not without risks. Ensuring autonomy, accountability, privacy, transparency, security, fairness, and sustainability has become a critical global concern. Both the World Health Organization (WHO) and Taiwan’s draft Fundamental Act of Artificial Intelligence emphasize that without proper guidance, AI could lead to ethical dilemmas and regulatory challenges.

To address these concerns, Chien-Chang Lee, MD, ScD, Director of the Department of Information Management at the Ministry of Health and Welfare (MOHW), launched the Center for Responsible AI in Healthcare. The Center is dedicated to developing a governance framework built on these seven core ethical principles, so that ethical and safety considerations evolve alongside innovations in smart healthcare technologies.

Figure 1. The seven major ethical risks of AI in healthcare under insufficient regulation

Core Strategies

1. Governance Measures Aligned with Security and Privacy Protection Principles

- Privacy Protection

When AI applications involve the use of personal data, healthcare institutions must implement robust and comprehensive privacy protection mechanisms. Adherence to established frameworks such as the U.S. Health Insurance Portability and Accountability Act (HIPAA) or the EU General Data Protection Regulation (GDPR) is strongly recommended. Identifiable personal data should be removed or securely encrypted to reduce the risks of re-identification and data leakage.

In addition, institutions should clearly define where data are stored and for how long, specify the access permissions granted to external administrators, and establish a routine audit mechanism to verify that these rules are followed. For AI applications that upload data to external cloud systems for inference, additional safeguards are needed, including stronger protection protocols and a detailed breach response plan. Together, these measures help maintain high standards of data security and regulatory compliance, especially when data are transmitted between institutions or sites.
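One common way to "remove or securely encrypt" identifiers before data leave the hospital is keyed pseudonymization. The sketch below is illustrative only: the field names and the `DIRECT_IDENTIFIERS` set are hypothetical assumptions, and a real deployment would follow its own de-identification standard (for example, the HIPAA Safe Harbor identifier list). Direct identifiers are dropped, and the patient ID is replaced with an HMAC-SHA256 digest whose key stays inside the institution.

```python
import hashlib
import hmac

# Hypothetical set of direct identifiers; replace with the institution's own list.
DIRECT_IDENTIFIERS = {"name", "national_id", "phone", "address"}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and replace patient_id with a keyed hash.

    The same patient_id always maps to the same pseudonym under one key,
    so records remain linkable for model inference, but the mapping cannot
    be recomputed by a party (e.g. a cloud provider) that lacks the key.
    """
    cleaned = {
        k: v
        for k, v in record.items()
        if k not in DIRECT_IDENTIFIERS and k != "patient_id"
    }
    # HMAC-SHA256 rather than a plain hash: without the key, an attacker
    # cannot simply hash every possible ID and match the results.
    cleaned["pseudo_id"] = hmac.new(
        secret_key, str(record["patient_id"]).encode("utf-8"), hashlib.sha256
    ).hexdigest()
    return cleaned


if __name__ == "__main__":
    key = b"institution-held-secret"  # hypothetical; keep in a key vault in practice
    raw = {"patient_id": "A123", "name": "Example", "phone": "000", "age": 54}
    print(pseudonymize(raw, key))
```

Pseudonymized data are not anonymous: remaining quasi-identifiers such as age or exam dates can still enable re-identification, which is why the storage, access, and audit controls described above remain necessary.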

- Information Security

When AI applications are hosted on external cloud platforms or managed by third parties, healthcare institutions must implement robust information security protocols to minimize the risk of system intrusions or data misuse.

Key recommendations include implementing multilayered cybersecurity defenses, such as firewalls and Intrusion Detection/Prevention Systems (IDS/IPS), to prevent unauthorized access; using network segmentation to limit access to sensitive systems and data; and conducting regular scans with automated vulnerability assessment tools. Additionally, the roles and access privileges of external administrators must be clearly defined. Institutions should implement periodic audits to ensure that security policies are consistently enforced and continuously refined in daily operations.
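Firewalls, IDS/IPS, and network segmentation are infrastructure controls, but the requirement that external administrators have "clearly defined" roles and privileges can be illustrated in a few lines. The sketch below assumes a hypothetical role map and resource names; it shows a deny-by-default check in which anything not explicitly granted is refused and every decision is logged for the periodic audits mentioned above.

```python
# Hypothetical role -> set of allowed (resource, action) pairs.
# Anything not listed here is denied.
ROLE_PERMISSIONS = {
    "external_vendor_admin": {
        ("ai_inference_service", "restart"),
        ("ai_inference_service", "read_logs"),
    },
    "hospital_it_admin": {
        ("ai_inference_service", "restart"),
        ("ai_inference_service", "read_logs"),
        ("ehr_database", "read"),
    },
}

# Each entry: (role, resource, action, allowed); reviewed during periodic audits.
audit_log = []


def is_allowed(role: str, resource: str, action: str) -> bool:
    """Deny-by-default check: unknown roles and unlisted actions are refused."""
    allowed = (resource, action) in ROLE_PERMISSIONS.get(role, set())
    audit_log.append((role, resource, action, allowed))
    return allowed


if __name__ == "__main__":
    # A vendor may restart the AI service but cannot read patient records.
    print(is_allowed("external_vendor_admin", "ai_inference_service", "restart"))
    print(is_allowed("external_vendor_admin", "ehr_database", "read"))
```

Keeping the vendor role away from `ehr_database` mirrors the segmentation principle at the application level: a compromised vendor account can affect the AI service but not the clinical record store.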

Figure 2. Three core pillars of AI implementation governance - Information security and privacy meet international standards

2. Transparency and Explainability

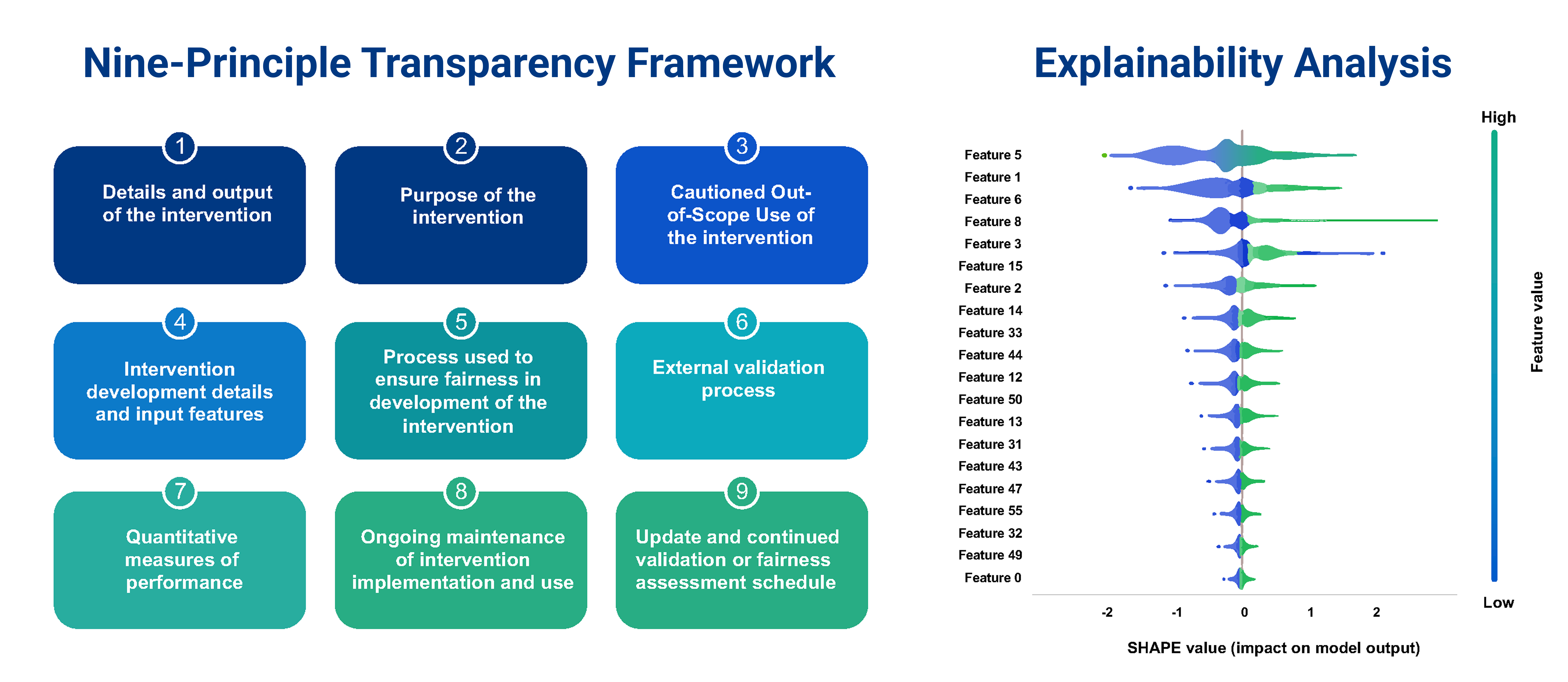

- Transparency Framework

Healthcare institutions deploying AI tools should ensure transparency by publicly disclosing essential information through dedicated online platforms. This includes the data sources used for training, the model architecture, the validation datasets, the intended clinical use cases, and certification status from regulatory bodies such as the FDA or equivalent medical review authorities. These disclosures should align with a nine-principle transparency framework to promote accountability, trust, and informed decision-making among stakeholders.
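A disclosure is easier to publish and check when it is kept as a structured record. The sketch below is a hypothetical schema covering only the items listed above (training data sources, model architecture, validation datasets, intended use, regulatory status); it does not attempt to encode the nine-principle framework itself, whose fields are not specified here.

```python
import json
from dataclasses import asdict, dataclass, fields


@dataclass
class AIToolDisclosure:
    """Hypothetical public disclosure record for one deployed AI tool."""

    tool_name: str
    training_data_sources: str
    model_architecture: str
    validation_datasets: str
    intended_clinical_use: str
    # e.g. cleared by the FDA or an equivalent authority, or "not certified"
    regulatory_status: str

    def missing_fields(self) -> list:
        """Names of fields left blank; a non-empty result should block publication."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def to_json(self) -> str:
        """Serialize for posting on the institution's disclosure page."""
        return json.dumps(asdict(self), ensure_ascii=False, indent=2)


if __name__ == "__main__":
    record = AIToolDisclosure(
        tool_name="Example mammography triage model",  # hypothetical
        training_data_sources="Retrospective screening mammograms (hypothetical)",
        model_architecture="Convolutional neural network",
        validation_datasets="",  # left blank on purpose
        intended_clinical_use="Prioritizing screening mammograms for radiologist review",
        regulatory_status="not certified",
    )
    print(record.missing_fields())
```

Requiring an explicit value such as "not certified" for regulatory status, rather than allowing the field to be omitted, makes the absence of certification visible to the public.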

- Explainability Analysis

Explainability techniques are crucial in clinical settings because they clarify how AI models arrive at predictions or decisions, supporting physician interpretation and reinforcing accountability. Tools such as SHAP (SHapley Additive exPlanations) and saliency maps offer insights into model behavior and decision logic, enhancing clinician confidence in AI outputs.
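To show what SHAP attributions mean, the sketch below computes exact Shapley values for a small model, with features outside a coalition set to a reference ("baseline") patient. This is not the SHAP library's API: the SHAP package provides explainers that approximate or accelerate this computation for real models, whereas the exact version here costs 2^n model calls and only suits a handful of features. The `risk_score` model and both input vectors are hypothetical.

```python
import math
from itertools import combinations


def shapley_values(model, x, baseline):
    """Exact Shapley attribution of model(x) - model(baseline) to each feature."""
    n = len(x)

    def value(coalition):
        # Features in the coalition take the patient's values; others take the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Standard Shapley weight |S|! (n - |S| - 1)! / n!
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def risk_score(z):
    """Hypothetical toy model: age, systolic blood pressure, smoker (0/1)."""
    age, sbp, smoker = z
    return 0.02 * age + 0.01 * sbp + 0.3 * smoker + 0.1 * smoker * (age > 60)


if __name__ == "__main__":
    patient = [67, 150, 1]
    reference = [50, 120, 0]  # hypothetical "typical patient" baseline
    for name, v in zip(["age", "sbp", "smoker"], shapley_values(risk_score, patient, reference)):
        print(f"{name}: {v:+.3f}")
```

Running it prints age +0.390, sbp +0.300, and smoker +0.350. The attributions sum to `risk_score(patient) - risk_score(reference)`, and the 0.1 interaction term between age and smoking is split equally between those two features, which is the kind of per-feature accounting a clinician can inspect.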

Figure 3. Three core pillars of AI implementation governance - Transparency principles for public disclosure and explainability analysis

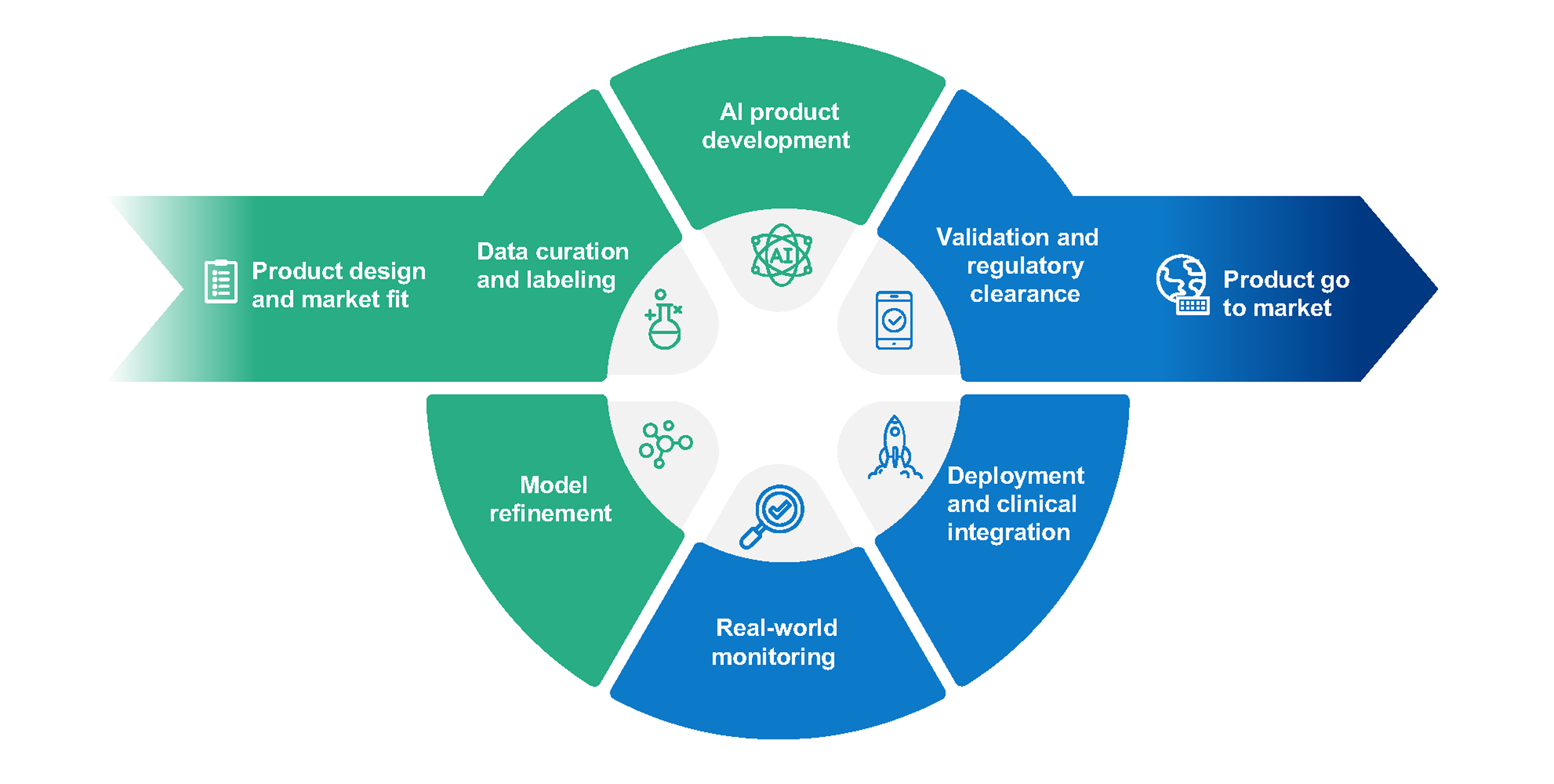

3. Monitoring AI Effectiveness Across the System Lifecycle

AI tools in clinical care require continuous monitoring and evaluation throughout their entire lifecycle, which covers not only development and deployment but also real-world use. Monitoring includes routine performance audits (e.g., every 6 to 12 months) on independent datasets to confirm that the model maintains its accuracy, reliability, and relevance as medical data and clinical practices change.

- Effectiveness Monitoring

For example, an AI model designed to detect breast cancer may have been trained on a large set of imaging data. Once deployed in a clinical setting, however, it must undergo regular evaluations to ensure sustained diagnostic accuracy, reliability, and fairness. Ongoing monitoring should include bias detection and correction, as well as comparison with expert radiologists' interpretations to validate clinical effectiveness.
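A periodic audit of the breast cancer example could compare the model's sensitivity with radiologists' sensitivity within each patient subgroup. The sketch below assumes a hypothetical case format `(group, truth, ai_pred, rad_pred)` with confirmed diagnoses as the reference standard, and a hypothetical tolerance `max_gap`; it flags subgroups where the model trails radiologists and reports whether sensitivity differs too much across subgroups.

```python
from collections import defaultdict


def audit(cases, max_gap=0.05):
    """Per-subgroup sensitivity audit for a deployed detection model.

    cases: iterable of (group, truth, ai_pred, rad_pred), where 1 = cancer.
    max_gap: hypothetical tolerance for (a) the AI shortfall versus
             radiologists and (b) the sensitivity spread across subgroups.
    Returns (report, flagged_groups, disparity_detected).
    """
    positives = defaultdict(int)
    ai_hits = defaultdict(int)
    rad_hits = defaultdict(int)
    for group, truth, ai_pred, rad_pred in cases:
        if truth == 1:  # sensitivity only considers confirmed cancers
            positives[group] += 1
            ai_hits[group] += ai_pred
            rad_hits[group] += rad_pred

    report = {
        g: {"ai": ai_hits[g] / n, "radiologist": rad_hits[g] / n}
        for g, n in positives.items()
    }
    # Subgroups where the model misses noticeably more cancers than radiologists.
    flagged = [g for g, r in report.items() if r["radiologist"] - r["ai"] > max_gap]
    ai_sens = [r["ai"] for r in report.values()]
    disparity = bool(ai_sens) and (max(ai_sens) - min(ai_sens) > max_gap)
    return report, flagged, disparity


if __name__ == "__main__":
    # Hypothetical audit sample: subgroups by breast density.
    sample = [
        ("dense", 1, 1, 1), ("dense", 1, 0, 1), ("dense", 0, 0, 0),
        ("non_dense", 1, 1, 1), ("non_dense", 1, 1, 0), ("non_dense", 0, 1, 0),
    ]
    print(audit(sample))
```

Sensitivity is only one axis; a full audit would also track specificity or false-positive rates, since a model can raise sensitivity in one group simply by flagging more normal exams.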

- Retraining and Optimization

As new mammogram images are collected, the model must be periodically retrained to maintain high performance. Feedback from clinicians should be gathered to identify potential issues, which then inform system updates and model improvements.

Figure 4. Three core pillars of AI implementation governance - AI lifecycle management

Future Outlook

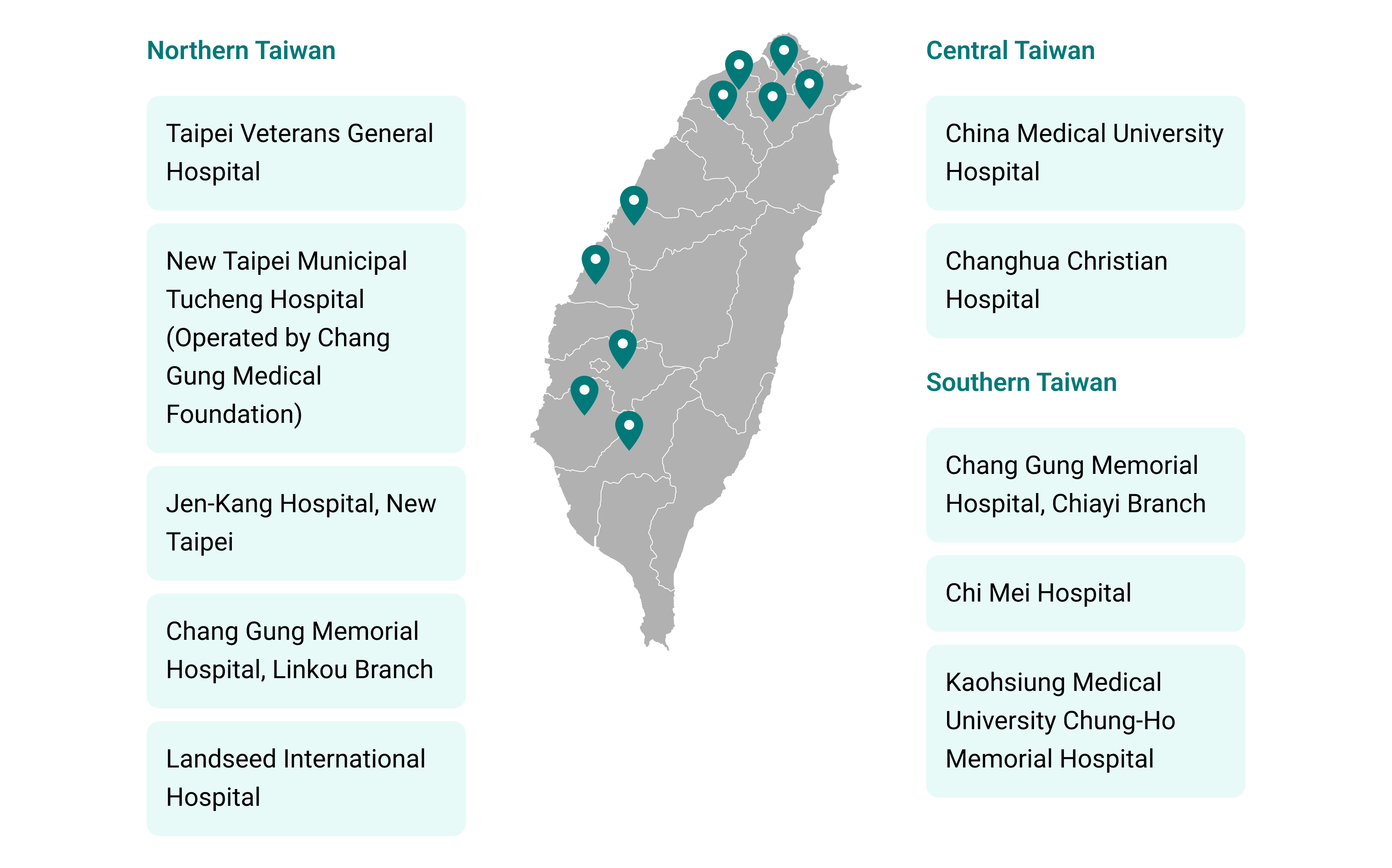

The Department of Information Management at MOHW is committed to promoting an innovative and forward-looking regulatory framework for smart healthcare. In 2024, it funded 10 hospitals across northern, central, and southern Taiwan, including medical centers, regional hospitals, and district hospitals, to promote equitable access to AI technologies across all levels of care.

This six-month pilot initiative included establishing hospital-level task forces, providing regular on-site guidance, and developing transparency mechanisms, explainability tools, and lifecycle monitoring systems. To foster public trust, each participating hospital was required to disclose its AI-related policies, designated contact points, and successful use cases, allowing the public to understand how AI is applied and how it affects care quality.

Looking ahead, the Center for Responsible AI in Healthcare will continue to foster the international exchange of best practices and successful implementations, with the aim of positioning Taiwan as a global leader in the safe, transparent, and ethically governed application of AI in medicine.

Figure 5. Center for Responsible AI in Healthcare

【Northern Taiwan】

Taipei Veterans General Hospital

New Taipei Municipal Tucheng Hospital (Operated by Chang Gung Medical Foundation)

Jen-Kang Hospital, New Taipei

Chang Gung Memorial Hospital, Linkou Branch

Landseed International Hospital

【Central Taiwan】

China Medical University Hospital

Changhua Christian Hospital

【Southern Taiwan】

Chang Gung Memorial Hospital, Chiayi Branch

Chi Mei Hospital

Kaohsiung Medical University Chung-Ho Memorial Hospital

Project Team and Advisors

Project Initiator

Chien-Chang Lee, M.D., Sc.D.

Chief Information Officer (CIO) for the Taiwan Ministry of Health and Welfare (MOHW)

Professor of Emergency Medicine

Deputy Director of the Center of Intelligent Healthcare at National Taiwan University (NTU) Hospital

Acknowledgements

We sincerely thank the following distinguished experts for their invaluable professional guidance and contributions to our initiative in advancing responsible AI in healthcare: Ming-Shiang Wu, MD, PhD, Convener of the Center for Responsible AI in Healthcare and Superintendent of National Taiwan University Hospital (Taipei, Taiwan); Kenneth Mandl, MD, MPH, Professor of Pediatrics and Biomedical Informatics at Harvard University and Director of the Computational Health Informatics Program at Boston Children’s Hospital (Boston, MA, USA); and David Rhew, MD, Global Chief Medical Officer (CMO) and Vice President of Healthcare at Microsoft (New York, NY, USA).


International Partner Matching Platform

We support international organizations and innovators in identifying and connecting with the most appropriate partners in Taiwan, including the officially designated Center for Responsible AI in Healthcare.